In the era of large language models (LLMs), knowledge has become one of the most intensively studied topics in natural language processing (NLP). In this post, I introduce trends from scientific papers about knowledge and NLP published in 2023. For this post, I analyzed papers from the following conferences. I didn’t target journals due to their longer review cycles.

Extract keyphrases

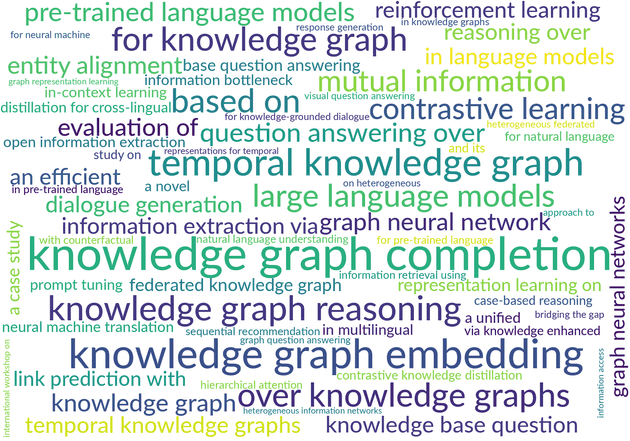

First, I applied keyphrase extraction to the titles of the papers. I calculated the normalized pointwise mutual information (nPMI) values for all the n-grams (up to 3-grams) in the titles. Then, I selected keyphrases with nPMI values above 0.5.
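nPMI rescales pointwise mutual information so that it is at most 1. For a bigram xy it is nPMI(x, y) = log[p(x, y) / (p(x)p(y))] / (-log p(x, y)). The post doesn't say how the probabilities were estimated or how the score was extended to trigrams, so the sketch below is one possible reading under stated assumptions. All probabilities are counts divided by the total number of tokens. An n-gram is normalized by -(n - 1) log p(n-gram), which reduces to the usual formula for bigrams and keeps trigram scores at most 1. N-grams seen fewer than `min_count` times are skipped. The function name `npmi_keyphrases` and the `min_count` parameter are hypothetical.

```python
import math
import re
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def npmi_keyphrases(titles, max_n=3, threshold=0.5, min_count=2):
    """Score 2..max_n-grams in titles by nPMI and keep those above threshold.

    Hypothetical helper; min_count is an assumed noise filter, not from the post.
    """
    counts = Counter()
    for title in titles:
        tokens = re.findall(r"\w+", title.lower())
        for n in range(1, max_n + 1):
            counts.update(ngrams(tokens, n))

    # Assumption: every probability uses the total token count as denominator.
    total = sum(c for gram, c in counts.items() if len(gram) == 1)

    keyphrases = {}
    for gram, c in counts.items():
        n = len(gram)
        if n < 2 or c < min_count:
            continue
        p_gram = c / total
        p_indep = math.prod(counts[(w,)] / total for w in gram)
        pmi = math.log(p_gram / p_indep)
        # Dividing by -(n - 1) * log p(gram) caps the score at 1.0;
        # for bigrams this is the standard nPMI normalization.
        score = pmi / (-(n - 1) * math.log(p_gram))
        if score > threshold:
            keyphrases[" ".join(gram)] = score
    return keyphrases
```

Using a single denominator for every probability is a simplification, but it guarantees that an n-gram whose words never occur apart scores exactly 1.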

Next, let’s examine some trends.

Knowledge and Language Models

Research on knowledge and language models covers many topics, but most studies fall into two broad groups:

- Injecting knowledge into LLMs

- Discovering knowledge in LLMs

Injecting knowledge into LLMs is necessary for various knowledge-intensive tasks, such as knowledge-grounded dialogue and knowledge base question answering. Retrieval-augmented generation (RAG), which retrieves relevant documents and passes them to the model together with the input, is a popular approach for this (Yu et al.; Hofstätter et al.; Zhang et al. (a)).
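To make the retrieve-then-generate idea concrete, here is a minimal sketch of the retrieval half of RAG. It is not the method of any paper cited above: it assumes a toy bag-of-words retriever with cosine similarity in place of the BM25 or dense retrievers used in practice, and the function names `retrieve` and `build_prompt` are hypothetical. The LLM call itself is left out; the code only builds the augmented prompt.

```python
import math
import re
from collections import Counter


def bow(text):
    """Lowercased bag-of-words vector (toy tokenizer: runs of word characters)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, passages, k=2):
    """Hypothetical retriever: the k passages most similar to the query."""
    q = bow(query)
    return sorted(passages, key=lambda p: cosine(q, bow(p)), reverse=True)[:k]


def build_prompt(query, passages, k=2):
    """Prepend the retrieved passages to the question (no LLM call here)."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


passages = [
    "Paris is the capital of France.",
    "The Eiffel Tower is in Paris.",
    "Tokyo is the capital of Japan.",
]
print(build_prompt("What is the capital of France?", passages, k=1))
```

The point of the design is that the knowledge lives outside the model: updating `passages` changes what the model sees without any retraining.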

Besides RAG, other knowledge injection methods have been proposed. The "plug-and-play" approach, which injects knowledge from models trained on downstream tasks, achieves high performance on knowledge-intensive NLP tasks (Zhang et al. (b)). Pre-training or re-training is also effective for acquiring knowledge; Zhang et al. (c) study how LLMs can learn new knowledge through training.

Considering real-world applications, updating existing knowledge is crucial. Zhong et al. propose a benchmark for knowledge editing, which tests the capability of LLMs to update knowledge in a knowledge base. Jang et al. study the removal of certain knowledge from LLMs. They propose a post hoc method to remove sensitive knowledge from LLMs without significant performance degradation.

Discovering knowledge in language models is also a hot topic. Some studies demonstrate that LLMs encode knowledge of cultural moral norms (Ramezani and Xu) and social knowledge (Choi et al.). However, encoding knowledge doesn't always mean that an LLM's outputs are grounded in it. Yao et al. report an interesting phenomenon: LLMs may give incorrect answers even when knowledge probing indicates that the relevant knowledge is encoded in the model.

Knowledge Graph Completion

Knowledge graph completion (KGC) is the task of predicting missing relations between entities in a knowledge graph. Many papers propose KGC methods (Li et al.; Xu et al.; Ott et al.).
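As a concrete picture of what predicting a missing link involves, the sketch below ranks candidate tails for a query (head, relation, ?) with TransE (Bordes et al., 2013), a classic embedding model that treats a relation as a translation, so that h + r ≈ t for true triples. It is an illustration only, not the method of Li et al., Xu et al., or Ott et al. The two-dimensional embeddings are hand-set toy values rather than trained ones, and the entity and relation names are hypothetical.

```python
import math


def transe_score(h, r, t):
    """TransE plausibility: higher (closer to 0) when h + r lies near t (L2 distance)."""
    return -math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def rank_tails(head, relation, entities, relations):
    """Rank every entity as a candidate tail for (head, relation, ?), best first."""
    h, r = entities[head], relations[relation]
    return sorted(entities, key=lambda e: transe_score(h, r, entities[e]), reverse=True)


# Hypothetical toy embeddings, set by hand so that head + capital_of lands on the country.
entities = {
    "Paris": [1.0, 0.0],
    "France": [1.0, 1.0],
    "Tokyo": [0.0, 2.0],
    "Japan": [0.0, 3.0],
}
relations = {"capital_of": [0.0, 1.0]}

print(rank_tails("Paris", "capital_of", entities, relations))
# prints ['France', 'Paris', 'Tokyo', 'Japan']
```

Note that answering a single query requires scoring every entity in the graph, which becomes relevant once graphs grow to millions of entities.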

Some authors propose completion methods for specific types of knowledge graphs. Tan et al. study KGC for sparse graphs, where entities have few relations. They propose a Knowledge Relational Attention Network and a Knowledge Contrastive Loss to enrich the information available about sparse entities and relations. Ren et al. propose a KGC method for temporal knowledge graphs. Their method models temporal relations between entities and applies self-attention among entities and among relations, which helps the model capture the graph structure.

Knowledge graphs tend to be very large, so throughput is crucial for knowledge graph completion, and several works propose efficient KGC methods. GreenKGC by Wang et al. is a lightweight approach, and Shang et al. propose a compact KGC model that maintains high accuracy. From an evaluation perspective, Bastos et al. propose a lightweight evaluation metric for KGC. Their metric, Knowledge Persistence, correlates with ranking-based metrics such as mean reciprocal rank (MRR) while being much faster to compute.
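Ranking-based metrics are costly because, for every test triple, the model must score all candidate entities to see where the correct one lands. The sketch below shows how MRR is computed from those ranks: it is the mean of 1/rank over test queries. The scores are hypothetical, and ties are broken optimistically (only strictly higher-scoring candidates push the rank down), which is one of several conventions. Many KGC evaluations also use a "filtered" setting that removes other known true triples before ranking; this sketch omits it.

```python
def rank_of(true_entity, scores):
    """1-based rank of the correct entity; scores maps entity -> score (higher is better)."""
    true_score = scores[true_entity]
    return 1 + sum(1 for e, s in scores.items() if e != true_entity and s > true_score)


def mrr(ranks):
    """Mean reciprocal rank over a non-empty list of 1-based ranks."""
    if not ranks:
        raise ValueError("need at least one rank")
    return sum(1.0 / r for r in ranks) / len(ranks)


# Hypothetical model scores for two test queries: (correct answer, scores over all candidates).
test_queries = [
    ("France", {"France": 0.9, "Paris": 0.95, "Tokyo": 0.1}),
    ("Japan", {"Japan": 0.8, "Tokyo": 0.3, "France": 0.2}),
]
ranks = [rank_of(answer, scores) for answer, scores in test_queries]
print(ranks, mrr(ranks))  # prints [2, 1] 0.75
```

With |T| test triples and |E| entities, this evaluation needs |T| × |E| model scores, which is exactly the cost a cheaper proxy metric aims to avoid.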