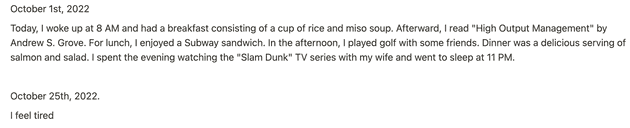

Most people agree that keeping a diary is worthwhile, and most also admit that it is hard to stay motivated. My biggest problem was that by the time I sat down to write at night, I could hardly recall what I had done during the day, and that eventually killed my motivation.

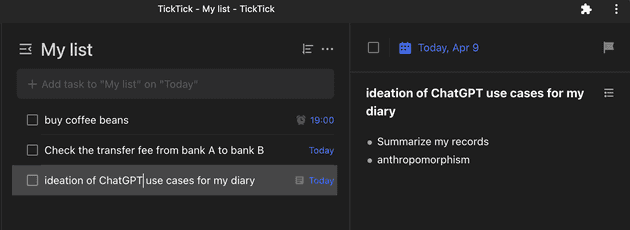

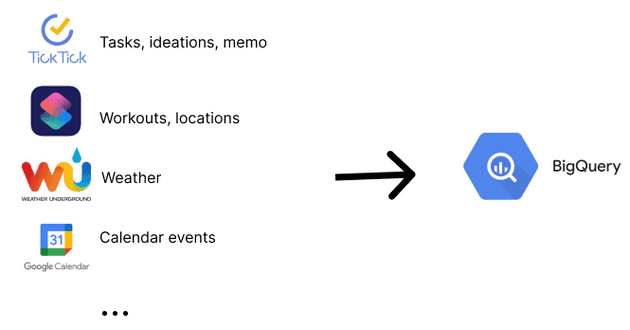

One day, it struck me that I had been keeping another diary without knowing it: I manage most of my daily life in my TODO list app, and the list of tasks marked as completed on a given day is a good record of what I did that day! The day’s weather can be obtained from other data sources, and my schedule is already on Google Calendar. I realized that aggregating this information could produce a comprehensive diary entry for the day.

Following this observation, I began to automatically keep my diary by storing this data. This blog post introduces the automation pipeline for achieving this.

Storage: BigQuery

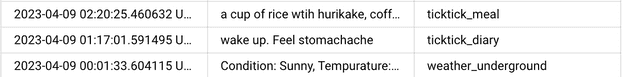

I use BigQuery for data storage. It is not designed for diaries, but you can build a viewer on top of it. (I am happy to read my diary by querying BigQuery directly.) BigQuery is also convenient when integrating various data sources.
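To make "reading the diary by querying BigQuery" concrete, here is a minimal sketch of fetching one day's records. The table ID and the column names (`occurred_at`, `source`, `detail`) are assumptions for illustration, not the schema I actually describe in this post, and `read_day` needs the `google-cloud-bigquery` package plus credentials to run.

```python
from datetime import date

# Hypothetical table ID and columns; adjust to your own schema.
TABLE_ID = "my-project.diary.events"


def diary_query(table_id=TABLE_ID):
    """Build a parameterized query that lists one day's records in time order."""
    return (
        "SELECT occurred_at, source, detail\n"
        f"FROM `{table_id}`\n"
        "WHERE DATE(occurred_at) = @day\n"  # assumes occurred_at is a TIMESTAMP
        "ORDER BY occurred_at"
    )


def read_day(day, table_id=TABLE_ID):
    """Run the query for a datetime.date and return the rows as dicts."""
    from google.cloud import bigquery  # imported lazily; only needed when querying

    config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("day", "DATE", day)]
    )
    rows = bigquery.Client().query(diary_query(table_id), job_config=config).result()
    return [dict(row) for row in rows]
```

Calling `read_day(date(2024, 1, 2))` would then return that day's records, assuming the table exists and is laid out as above.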

Data Flow

I wrote a simple Python function, deployed as a cloud function, that stores data in BigQuery. When a trigger fires (such as a task completion, a weather broadcast, or a CarPlay activation), an automation tool sends an HTTPS request containing the trigger information to the function, which then writes the data to BigQuery. Every service in this process is serverless, so there is no need to keep a compute instance running continuously.
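The flow above can be sketched roughly as follows. This is not my exact function: the payload fields (`source`, `occurred_at`, `detail`), the table ID, and the helper names are all assumptions made for illustration. The handler takes the already-parsed JSON body, so the HTTP entry point of whichever serverless platform you use would only need to parse the request and call `handle_trigger`.

```python
import json
from datetime import datetime, timezone

# Hypothetical table ID and field names; the real schema may differ.
TABLE_ID = "my-project.diary.events"


def build_row(payload, now=None):
    """Turn a trigger payload into one flat row for the diary table."""
    if not isinstance(payload, dict) or "source" not in payload:
        raise ValueError("payload must be a JSON object with a 'source' field")
    now = now or datetime.now(timezone.utc)
    return {
        "source": str(payload["source"]),
        # Fall back to the time of receipt when the trigger sends no timestamp.
        "occurred_at": payload.get("occurred_at") or now.isoformat(),
        "detail": json.dumps(payload.get("detail", {}), ensure_ascii=False, sort_keys=True),
    }


def write_row(row, table_id=TABLE_ID):
    """Stream one row into BigQuery (needs google-cloud-bigquery and credentials)."""
    from google.cloud import bigquery  # imported lazily so build_row works offline

    errors = bigquery.Client().insert_rows_json(table_id, [row])
    if errors:
        raise RuntimeError(f"BigQuery insert failed: {errors}")


def handle_trigger(payload, writer=write_row):
    """Validate the payload, write it, and return (body, HTTP status)."""
    try:
        row = build_row(payload)
    except ValueError as exc:
        return {"error": str(exc)}, 400
    writer(row)
    return {"stored": row["source"]}, 200
```

Passing the writer as an argument keeps the validation logic testable without touching BigQuery, for example `handle_trigger({"source": "todo"}, writer=print)`.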

And... that’s it!

Future Work

Some people may argue that this is not a “diary” but rather a collection of data records. I understand that view, but I believe that simply summarizing the data into a natural-language paragraph would make it read much more like a diary entry. Generative AI seems well suited to this task, and I plan to give it a try in the near future!
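As a first step toward that, one could turn a day's records into a prompt for a text-generation model. The sketch below only builds the prompt text. The record fields (`occurred_at`, `source`, `detail`) and the wording of the instruction are assumptions, and the call to an actual model is deliberately left out because I have not chosen one yet.

```python
def build_diary_prompt(day, records):
    """Format one day's records, oldest first, into a summarization prompt."""
    ordered = sorted(records, key=lambda r: r["occurred_at"])
    lines = [f"- {r['occurred_at']} [{r['source']}] {r['detail']}" for r in ordered]
    return (
        f"Write a short first-person diary entry for {day} "
        "based only on these records:\n" + "\n".join(lines)
    )
```

The resulting string could be sent to any generative model, and the reply stored next to the raw records.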